Let's chat about programming with LangChainJS and Ollama

And this is still happening on a Pi 5 (and propelled by 🐳 Docker Compose)

I feel much more comfortable with JavaScript than with Python (all the previous blog posts of this series "speak Python"). Also, I was thrilled when I discovered these two essential things:

There is an Ollama JavaScript library whose API is designed around the Ollama REST API.

There is a JavaScript version of LangChain: LangChainJS.

So, I added a JavaScript development environment to the Pi GenAI Stack project, with a WebIDE and all the necessary dependencies to start experimenting.

Don't forget to git pull the latest version.

To start the JavaScript Dev environment, type the following command:

docker compose --profile javascript up

In the same way, you can run the Python Dev environment with the following command:

docker compose --profile python up

Once the stack is started, you can connect to the WebIDE with http://<dns_name_of_the_pi_or_IP_address>:3001 or http://localhost:3001 if you are working directly on your Pi.

First Contact: Ollama JavaScript Library

Once the WebIDE is open, create a directory with a new file index.mjs and add the following content to this file:

    import {Ollama} from 'ollama'

    let ollama_base_url = process.env.OLLAMA_BASE_URL

    let ollama = new Ollama({
      host: ollama_base_url
    })

    const response = await ollama.chat({
      model: 'deepseek-coder',
      messages: [{
        role: 'user',
        content: 'what is rustlang?'
      }],
      stream: true
    })

    for await (const part of response) {
      process.stdout.write(part.message.content)
    }

Steps:

Import the Ollama library:

import {Ollama} from 'ollama'

Create an instance of the Ollama helper:

let ollama = new Ollama(...)

Then, "chat" with the LLM:

ollama.chat({...})

When we call the API with ollama.chat, we set the stream field to true. Then, we can display the results progressively in the terminal with a for loop.
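The for loop above prints each piece as it arrives, but sometimes you also want the complete answer at the end. Here is a minimal sketch of that idea. Note that collectAnswer and fakeChatStream are hypothetical names of mine, not part of the Ollama library; the fake stream only imitates the { message: { content } } shape used in the script above, so the sketch runs without a model.

```javascript
// Hypothetical stand-in for the stream returned by ollama.chat({ stream: true }):
// an async generator yielding objects shaped like { message: { content } }.
async function* fakeChatStream(pieces) {
  for (const piece of pieces) {
    yield { message: { content: piece } }
  }
}

// Consume a stream part by part, forward each piece to an optional callback,
// and return the concatenated answer once the stream ends.
async function collectAnswer(stream, onPiece = () => {}) {
  let answer = ""
  for await (const part of stream) {
    const piece = part.message.content
    onPiece(piece)
    answer += piece
  }
  return answer
}
```

With the real response object, something like await collectAnswer(response, (piece) => process.stdout.write(piece)) should keep the progressive display and still give you the whole text afterwards.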

Run the script like this:

node index.mjs

I use the .mjs extension with Node.js to explicitly tell it that the file contains code written in the ECMAScript Modules (ESM) format.

This is the result:

It's time to look at LangChainJS to benefit from many great features.

Ask questions with the help of LangChain

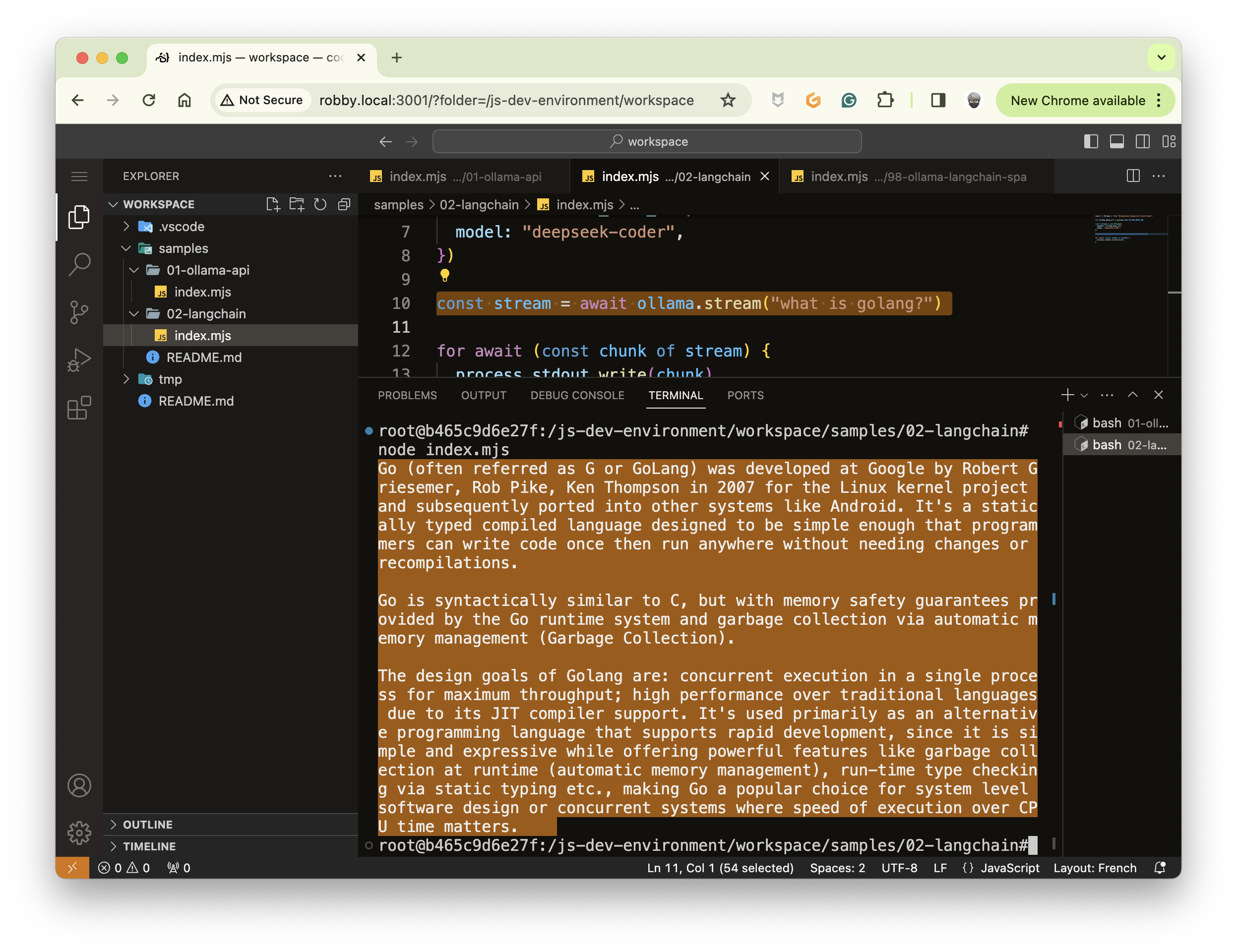

Create a second directory with a new file index.mjs and add the following content to this file:

    import { Ollama } from "@langchain/community/llms/ollama";

    let ollama_base_url = process.env.OLLAMA_BASE_URL

    const ollama = new Ollama({
      baseUrl: ollama_base_url,
      model: "deepseek-coder",
    })

    const stream = await ollama.stream("what is golang?")

    for await (const chunk of stream) {
      process.stdout.write(chunk)
    }

Notice that the code is very similar to the previous one. In this new example, the LLM object (ollama) has a stream method, and each chunk it yields is already a string. Run node index.mjs to get the answer to your question.
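Both scripts so far read the Ollama address from the OLLAMA_BASE_URL environment variable, which I assume the stack defines inside the WebIDE container. If you run a script somewhere that variable is not defined, a small fallback can help. This is only a sketch: resolveOllamaBaseUrl is a hypothetical helper (it belongs to neither library), and http://localhost:11434 is used as the fallback because it is Ollama's usual default address.

```javascript
// Return the Ollama base URL from an environment-like object.
// Falls back to a default when OLLAMA_BASE_URL is missing or blank.
// Assumption: http://localhost:11434 is where a local Ollama usually listens.
function resolveOllamaBaseUrl(env, fallback = "http://localhost:11434") {
  const value = (env.OLLAMA_BASE_URL || "").trim()
  // Remove trailing slashes so the URL always has the same shape
  return (value === "" ? fallback : value).replace(/\/+$/, "")
}
```

You could then write const ollama_base_url = resolveOllamaBaseUrl(process.env) at the top of index.mjs.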

Improve the prompt

In a previous blog post about LangChain Python, "Prompts and Chains with Ollama and LangChain", I explained that LangChain has helpers to construct efficient prompts. The JavaScript version of LangChain also provides these helpers.
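To make the idea of a prompt template concrete, here is a toy sketch of what such a helper does at its core: it replaces named placeholders like {question} with values. The fillTemplate function is hypothetical and much simpler than the LangChain helpers, which do a lot more than this substitution.

```javascript
// Toy placeholder substitution: replaces every {name} in the template
// with the matching entry of `values`. Not LangChain's implementation.
function fillTemplate(template, values) {
  return template.replace(/\{(\w+)\}/g, (match, name) => {
    if (!(name in values)) {
      throw new Error(`Missing value for placeholder: ${name}`)
    }
    return String(values[name])
  })
}
```

For example, fillTemplate("I need a clear explanation regarding my {question}.", { question: "structs" }) gives back the sentence with the question filled in.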

So, create a third directory with a new file index.mjs and add the following content to this file:

    //import { Ollama } from "@langchain/community/llms/ollama";
    import { ChatOllama } from "@langchain/community/chat_models/ollama"
    import { StringOutputParser } from "@langchain/core/output_parsers"

    import {
      SystemMessagePromptTemplate,
      HumanMessagePromptTemplate,
      ChatPromptTemplate
    } from "@langchain/core/prompts"

    let ollama_base_url = process.env.OLLAMA_BASE_URL

    const model = new ChatOllama({
      baseUrl: ollama_base_url,
      model: "deepseek-coder",
      temperature: 0,
      repeatPenalty: 1,
      verbose: false
    })

    const prompt = ChatPromptTemplate.fromMessages([
      SystemMessagePromptTemplate.fromTemplate(
        `You are an expert in computer programming.
        Please make friendly answer for the noobs.
        Add source code examples if you can.
        `
      ),
      HumanMessagePromptTemplate.fromTemplate(
        `I need a clear explanation regarding my {question}.
        And, please, be structured with bullet points.
        `
      )
    ])

    const outputParser = new StringOutputParser()

    const chain = prompt.pipe(model).pipe(outputParser)

    let stream = await chain.stream({
      question: "what are structs in Golang?",
    })

    for await (const chunk of stream) {
      process.stdout.write(chunk)
    }

Some remarks about this source code:

I use @langchain/community/chat_models/ollama instead of @langchain/community/llms/ollama. The llms package provides the core functionality for interacting with Ollama as a general-purpose LLM. The chat_models package focuses explicitly on using Ollama as a chat model and provides functionalities tailored for conversational interactions.

@langchain/core/prompts provides the tools to build "clever" prompts.

You can see that with ChatPromptTemplate.fromMessages I try to guide the LLM as much as possible.

About StringOutputParser: an output parser transforms an LLM's output into a more suitable format. StringOutputParser is a simple output parser that converts the output of an LLM into a string.

And thanks to the pipe method, I can construct a chain: const chain = prompt.pipe(model).pipe(outputParser). A chain in LangChain is like connecting Lego bricks. You link several components in a specific order, where the output of one becomes the input for the next. And in the end, the chain has a stream method.
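The Lego brick idea can be sketched in a few lines of plain JavaScript. This is a toy model of the concept, not LangChain's implementation: step, toyPrompt, fakeModel and toyParser are hypothetical names, the fake model just echoes its input, and everything is synchronous to keep the sketch short.

```javascript
// Toy "brick": wraps a function and exposes pipe() to plug another brick after it.
// It only imitates the idea behind prompt.pipe(model).pipe(outputParser).
function step(fn) {
  return {
    invoke: fn,
    pipe(next) {
      // The output of this brick becomes the input of the next one
      return step((input) => next.invoke(fn(input)))
    },
  }
}

// Hypothetical bricks: a prompt formatter, a fake model, and an output parser
const toyPrompt = step(({ question }) => `I need a clear explanation regarding my ${question}.`)
const fakeModel = step((text) => ({ content: `ECHO: ${text}` }))
const toyParser = step((message) => message.content)

const toyChain = toyPrompt.pipe(fakeModel).pipe(toyParser)
```

Calling toyChain.invoke({ question: "structs" }) runs the three bricks in order and returns a plain string, which mirrors the role StringOutputParser plays at the end of the real chain.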

Again, run node index.mjs to get a detailed and straightforward answer to your question:

That's all for today. The next article will explain how to create an API with Fastify to stream LLM responses to an SPA (and the front-end part will also be explained).

I wish you happy Sunday experiments.