Create a Web UI to use the GenAI streaming API

With LangChainJS, Ollama and Fastify, still on a Pi 5 (and propelled by 🐳 Docker Compose)

Today, we won't talk about AI itself; instead, we will build a SPA (Single Page Application) that uses the GenAI API we created in the previous post: "GenAI streaming API with LangChainJS, Ollama and Fastify"

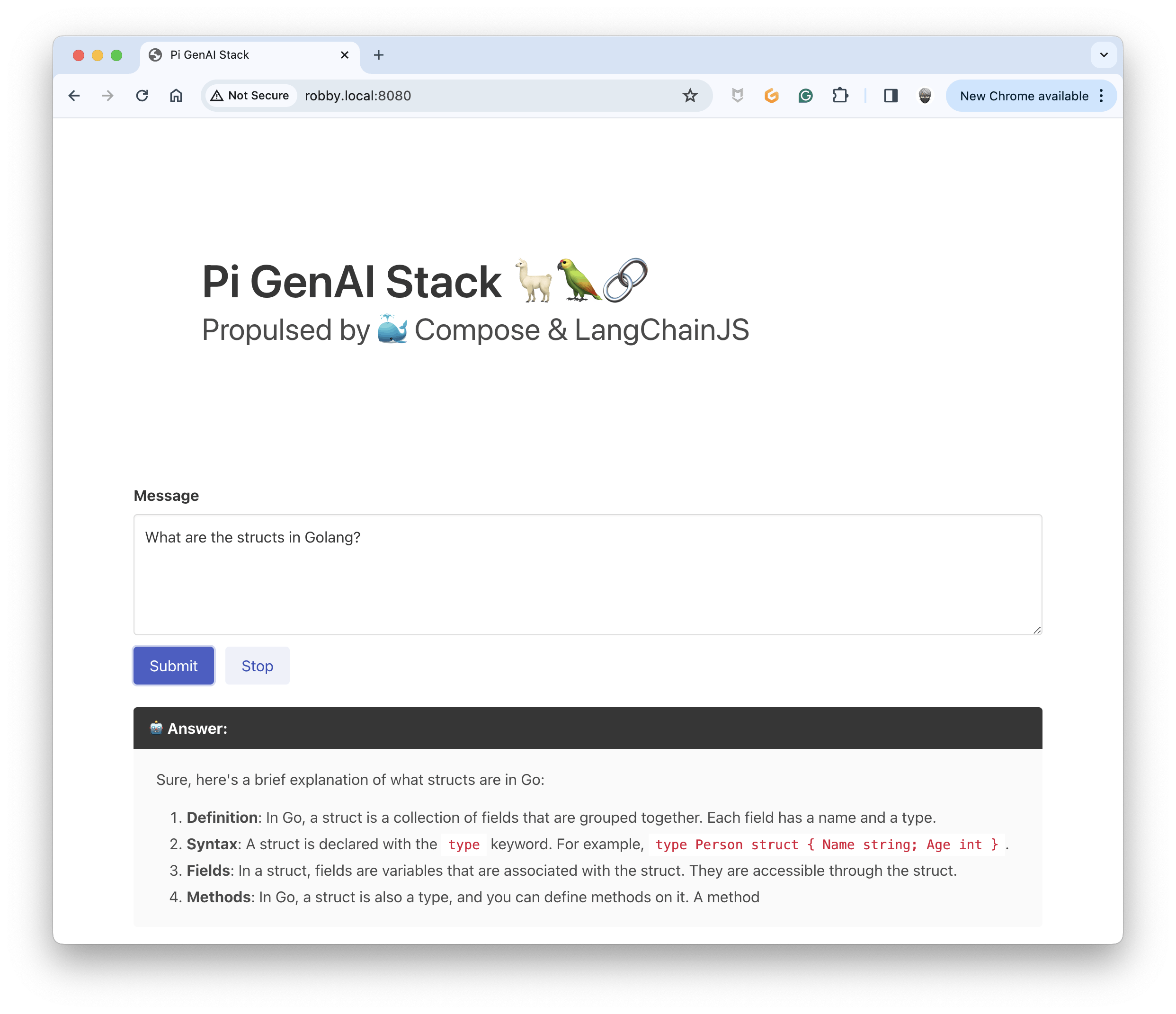

This is the final result:

I want:

A TextBox to type the question

A "Submit" button to send the question

A place to display the stream of the answer in a rich text format (we will use Markdown)

A "Stop" button to stop the stream

Web App setup

For the CSS part, I used Bulma (I downloaded the zip file, unzipped it, and kept only the bulma.min.css file) (1)

For the Markdown (2) transformation (Markdown to HTML), I used markdown-it (I downloaded the markdown-it.min.js file from here: https://cdn.jsdelivr.net/npm/markdown-it@14.0.0/dist/markdown-it.min.js) (3)

(1) & (3): I chose to download the files for simplicity. In real life, you should use the appropriate installation method for each component.

(2) The DeepSeek Coder model uses markdown when it generates source code examples.

I created the following project tree:

.
├── index.mjs
└── public
    ├── css
    │   └── bulma.min.css
    ├── index.html
    └── js
        └── markdown-it.min.js

index.mjs is the GenAI API application we developed in the previous blog post, so you only need to copy it to the project's root. index.html is the file that interests us today.

Create the SPA

The most important thing is to understand how to post the question to the API and then handle the streamed answer. It's pretty easy because the browser's fetch API handles streams natively: response.body is a ReadableStream that can be read chunk by chunk (see the MDN documentation: "Using readable streams").

So, I used it like this:

// This code runs inside the async "click" handler of the Submit button
let aborter = new AbortController()
let responseText = ""
// One decoder for the whole response, so that a multi-byte character
// split across two chunks is still decoded correctly
const decoder = new TextDecoder()
// One Markdown renderer, reused for every chunk
const md = markdownit()

try {
  const response = await fetch("/prompt", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      question: txtPrompt.value
    }),
    signal: aborter.signal
  })

  const reader = response.body.getReader()

  while (true) {
    const { done, value } = await reader.read()

    if (done) {
      // The stream is finished: empty the question text box and exit
      txtPrompt.value = ""
      return
    }
    // Otherwise, process the current chunk
    // Decode the value of the chunk
    const decodedValue = decoder.decode(value, { stream: true })
    // Add the decoded value to the responseText variable
    responseText = responseText + decodedValue
    // Transform the content of responseText to HTML
    // and update the content of the txtResponse HTML object
    txtResponse.innerHTML = md.render(responseText)
  }

} catch(error) {
  if (error.name === 'AbortError') {
    // The request was cancelled (we stopped receiving the stream)
    console.log("✋", "Fetch request aborted")
    txtPrompt.value = ""
  } else {
    console.log("😡", error)
  }
}
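One detail of the decoding step is worth a closer look: the chunks delivered by the reader are raw bytes, and a chunk boundary can fall in the middle of a multi-byte UTF-8 character (an accented letter or an emoji, for example). Here is a minimal sketch, runnable in Node.js or in the browser console, that compares a single decoder used with the `stream: true` option against a new decoder per chunk. The `decodeChunks` helper and the sample chunks are only there for illustration; they are not part of the page.

```javascript
// Hypothetical helper (illustration only): decode a list of byte chunks
// with ONE decoder, the way a streamed response would be decoded.
function decodeChunks(chunks) {
  const decoder = new TextDecoder("utf-8")
  let text = ""
  for (const chunk of chunks) {
    // stream: true keeps an incomplete trailing character in the decoder
    // until the next chunk provides the missing bytes
    text += decoder.decode(chunk, { stream: true })
  }
  // Flush anything still buffered at the end of the stream
  text += decoder.decode()
  return text
}

// "é" is encoded on two bytes (0xC3 0xA9): cut the data between them
const bytes = new TextEncoder().encode("café")
const chunks = [bytes.slice(0, 4), bytes.slice(4)]

// One decoder for all the chunks: the character is rebuilt correctly
const streamed = decodeChunks(chunks)

// A new decoder for every chunk: each half of "é" becomes a replacement character
const naive = chunks.map(chunk => new TextDecoder().decode(chunk)).join("")

console.log(streamed === "café") // true
console.log(naive === "café")    // false
```

With plain ASCII answers both approaches give the same text, so the difference only shows up when the model emits accented characters or emojis.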

In the end, the content of index.html is the following:

<!doctype html>
<html lang="en">
<head>
  <title>Pi GenAI Stack</title>
  <meta name="description" content="">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <meta http-equiv="x-ua-compatible" content="ie=edge">
  <meta charset="utf-8">

  <meta http-equiv="Cache-Control" content="no-cache, no-store, must-revalidate" />
  <meta http-equiv="Pragma" content="no-cache" />
  <meta http-equiv="Expires" content="0" />

  <link rel="stylesheet" href="./css/bulma.min.css">
  <script src="./js/markdown-it.min.js"></script>
</head>
<body>
  <div class="container">
    <section class="hero is-medium">
      <div class="hero-body">
        <p class="title is-1">
          Pi GenAI Stack 🦙🦜🔗
        </p>
        <p class="subtitle is-3">
          Propulsed by 🐳 Compose & LangChainJS
        </p>
      </div>
    </section>
  </div>

  <div class="container">
    <div class="field">
      <label class="label">Message</label>
      <div class="control">
        <textarea id="txt_prompt" class="textarea" placeholder="Type your question here"></textarea>
      </div>
    </div>

    <div class="content">
      <div class="field is-grouped">
        <div class="control">
          <button id="btn_submit" class="button is-link">Submit</button>
        </div>
        <div class="control">
          <button id="btn_stop" class="button is-link is-light">Stop</button>
        </div>
      </div>
    </div>

    <div class="content">
      <article class="message is-dark">
        <div class="message-header">
          <p>🤖 Answer:</p>
        </div>
        <div id="txt_response" class="message-body">
        </div>
      </article>
    </div>
  </div>

  <script type="module">
    let btnSubmit = document.querySelector("#btn_submit")
    let btnStop = document.querySelector("#btn_stop")
    let txtPrompt = document.querySelector("#txt_prompt")
    let txtResponse = document.querySelector("#txt_response")

    // One Markdown renderer, reused for every chunk
    const md = markdownit()
    let aborter = new AbortController()

    btnSubmit.addEventListener("click", async _ => {
      let responseText = ""
      // One decoder per response, so that a multi-byte character
      // split across two chunks is still decoded correctly
      const decoder = new TextDecoder()
      // An aborted controller cannot be reused: create a fresh one for each request
      aborter = new AbortController()

      try {
        const response = await fetch("/prompt", {
          method: "POST",
          headers: {
            "Content-Type": "application/json",
          },
          body: JSON.stringify({
            question: txtPrompt.value
          }),
          signal: aborter.signal
        })

        const reader = response.body.getReader()

        while (true) {
          const { done, value } = await reader.read()

          if (done) {
            // The stream is finished: empty the question text box and exit
            txtPrompt.value = ""
            return
          }
          // Otherwise, process the current chunk
          const decodedValue = decoder.decode(value, { stream: true })
          console.log(decodedValue)
          responseText = responseText + decodedValue
          txtResponse.innerHTML = md.render(responseText)
        }

      } catch(error) {
        if (error.name === 'AbortError') {
          console.log("✋", "Fetch request aborted")
          txtPrompt.value = ""
        } else {
          console.log("😡", error)
        }
      }
    })

    btnStop.addEventListener("click", async _ => {
      aborter.abort()
    })
  </script>
</body>
</html>
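A word about the "Stop" button: an AbortController is single-use. Once abort() has been called, its signal stays aborted, which is why the page needs a fresh controller before the next request can go through. The short sketch below (runnable in Node.js or in the browser console) illustrates this behaviour; the variable names are only for the example.

```javascript
// First controller: plays the role of the one attached to a running request
const first = new AbortController()

// Anything listening to the signal is notified when abort() is called
let notified = false
first.signal.addEventListener("abort", () => { notified = true })

console.log(first.signal.aborted) // false

// This is what the "Stop" button does
first.abort()
console.log(first.signal.aborted) // true
console.log(notified)             // true

// The first signal will stay aborted: a fetch started with it would be
// rejected right away, so the next request needs a new controller
const second = new AbortController()
console.log(second.signal.aborted) // false
```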

You can find all the source code here: https://github.com/bots-garden/pi-genai-stack/tree/main/js-dev-environment/workspace/samples/05-prompt-spa

Let's run the GenAI WebApp

From the application's directory, run the following command:

node index.mjs

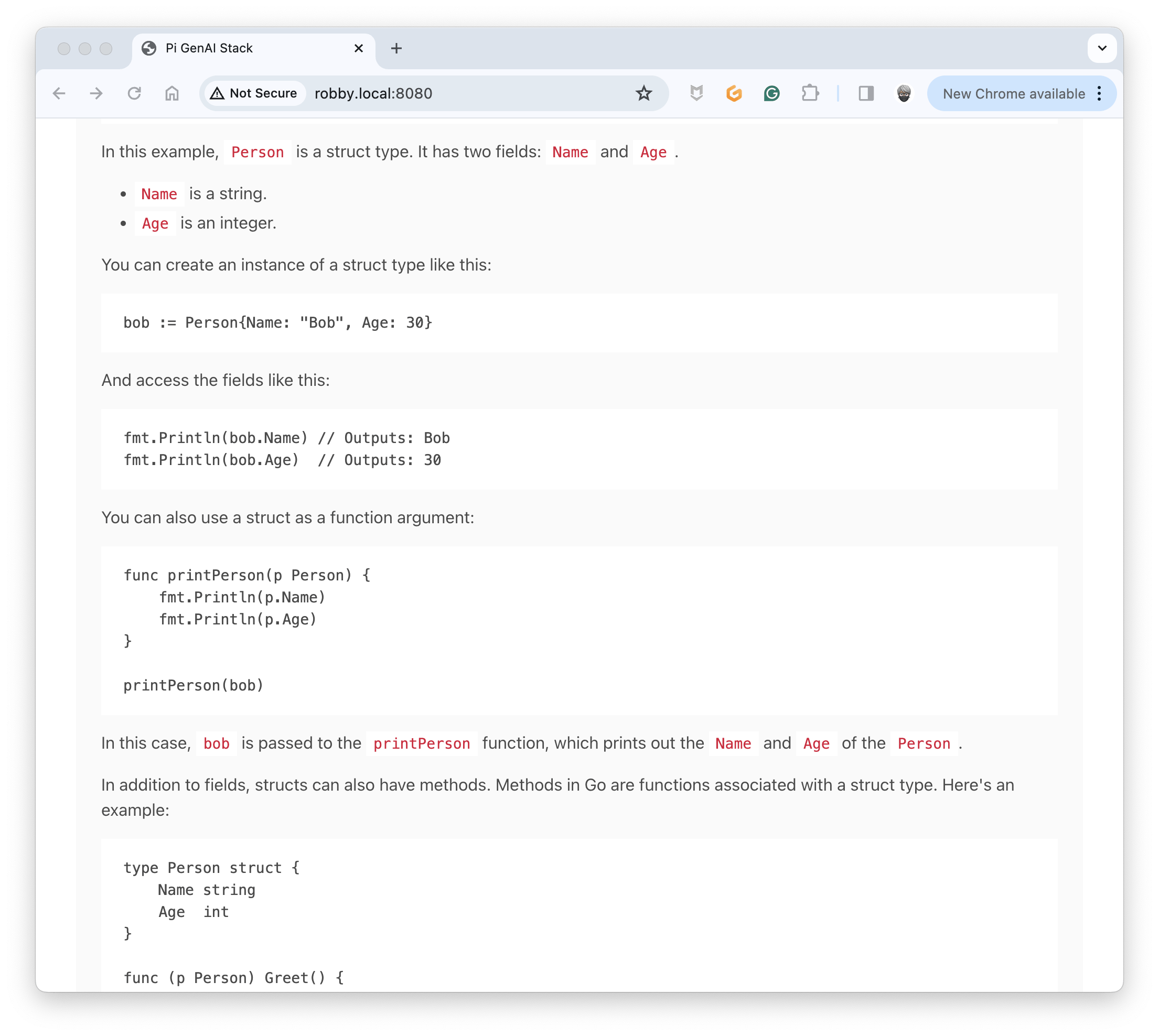

And now, you can query DeepSeek Coder from the Web UI:

That's it for today. In the next post, we will see how to add memory to our Chat Bot to have a real conversation.